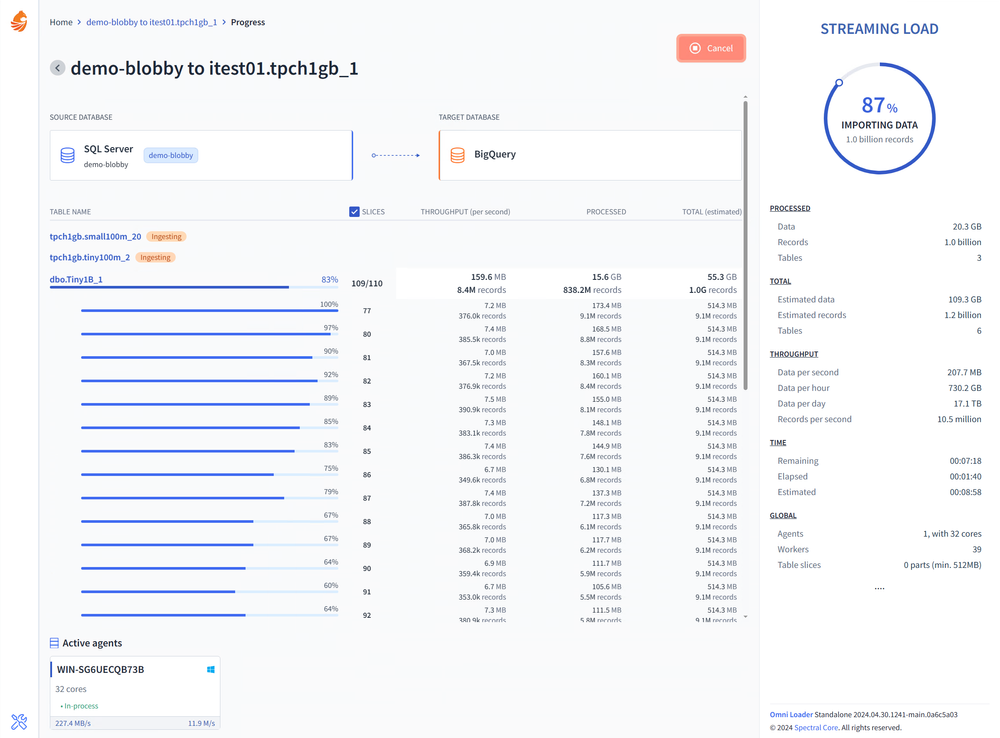

Omni Loader is an advanced database migration tool offering unmatched performance out of the box. It significantly reduces risks in large migration projects, effortlessly handling even 100 TB+ databases. It provides automatic vertical and effortless horizontal scaling. Setup time is completely eliminated due to its fully self-tuning engine.

Omni Loader is fully self-tuning and works great out of the box.

No configuration is needed. Omni Loader performs optimally with its default settings, but options are available if you need to customize the default behavior.

Customize schema mapping, table and column naming, or override data type mapping rules. Some databases contain critical tables with high data volumes that need special handling. You can adjust settings and override project-wide rules for any specific table that requires different treatment.

Connect to your databases and run the migration — as simple as that.

Easily copy data between on-premises and cloud databases, whether relational databases or data warehouses.

Even a single large table can be copied rapidly thanks to automated slicing.

Make advanced customizations quickly with flexible mapping rules.

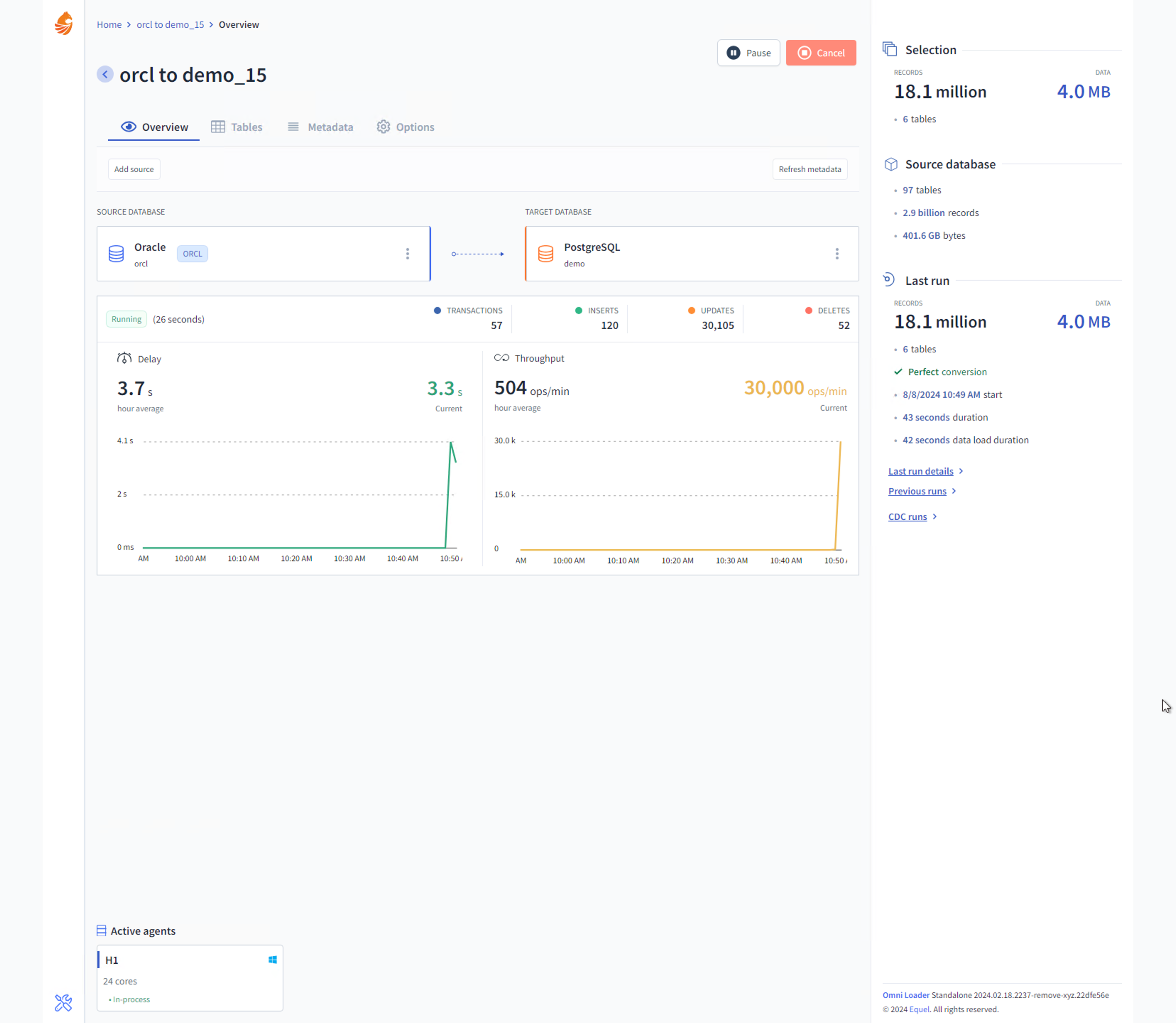

Databases and tables are automatically set up for CDC with no need to run separate scripts.

In CDC projects, we stream transactions to any relational target server in near real time.

Our massively parallel data migration engine is used to rapidly initialize the target database.


There is no limit on the number of source databases you can copy data from, and you can mix multiple database types.

Group tables from a single database into a specific schema, or use our schema mapping feature to arrange data as you like.

The workflow is exactly the same whether you have one source database or twenty.

Over 30 database types are supported: OLTP and OLAP, hosted on-premises and in the cloud.

We are an independent software vendor specializing in database solutions.

Allow us to help you get your data migration job done as quickly as possible.